Stacking switches Part - IV (Aruba CX Switch VSX - Virtual Switching Extension)

HPE has released a new switching family under the brand name Aruba CX. These switches support stacking in the form of MLAG. Today we will look at how to configure MLAG (multi-chassis link aggregation) between two Aruba CX series switches. Aruba calls its MLAG implementation Virtual Switching Extension (VSX).

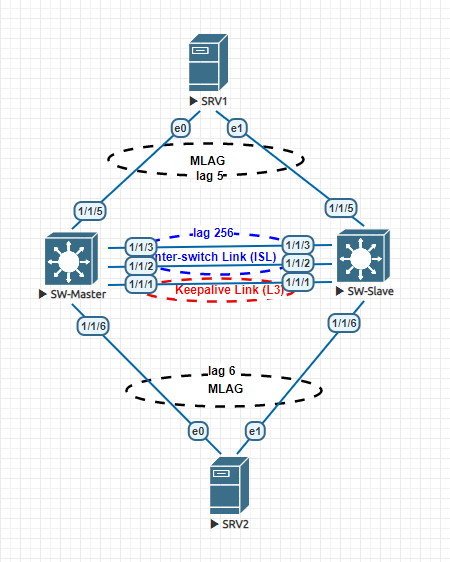

Let's look at our network topology -

- Two Aruba CX switches will run peering between them and will form MLAG.

- Two Debian 10 Linux machines will each form a multi-chassis link aggregation with the Aruba switches. They simulate the client connections.

Our topology looks like below -

Figure: Aruba CX MLAG Topology

Let's have a look at some terminology before we start configuring VSX. We need to define one switch as Master and the other as Slave (the CLI calls these roles primary and secondary). VSX is an active-active forwarding solution, but the master and slave roles are still required, for two reasons: during a VSX split only the master forwards traffic, and the roles determine the direction of configuration sync. We will come back to configuration sync later in this article; it is one of the useful features of a VSX MLAG setup.

ISL (Inter-Switch Link) ports are the ports through which the member switches of the fabric communicate with each other, pass VSX protocol packets and synchronize with each other. It is recommended to use at least two ports on each member for redundancy.

The keepalive link is a layer 3 interface used to exchange heartbeats between the VSX peer switches. These heartbeat packets are exchanged over UDP port 7678 by default. In the event of an ISL failure, the switches use the keepalive link to find out whether both VSX switches are still up and running. This lets them tell a failed ISL apart from a failed peer and handle the split accordingly.
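
The interplay of roles, ISL and keepalive described above can be summarised as a small decision table. The Python sketch below is only an illustration of that logic, not Aruba's implementation. The function name `mlag_ports_forwarding` is hypothetical, and the behaviour when both the ISL and the keepalive are down (each switch assumes its peer has failed and keeps forwarding) is an assumption that is not taken from this article.

```python
def mlag_ports_forwarding(role: str, isl_up: bool, keepalive_up: bool) -> bool:
    """Return True if this switch keeps its MLAG ports forwarding.

    role is "primary" or "secondary" (hypothetical helper, for illustration).
    """
    if role not in ("primary", "secondary"):
        raise ValueError(f"unknown role: {role}")
    if isl_up:
        # Normal operation: VSX is active-active, both peers forward.
        return True
    if keepalive_up:
        # ISL is down but the peer is still alive (VSX split):
        # only the primary continues to forward.
        return role == "primary"
    # Assumption: with ISL and keepalive both down the peer looks dead,
    # so each switch keeps forwarding on its own.
    return True


if __name__ == "__main__":
    for role in ("primary", "secondary"):
        for isl_up, keepalive_up in ((True, True), (False, True), (False, False)):
            result = mlag_ports_forwarding(role, isl_up, keepalive_up)
            print(f"{role:9} isl_up={isl_up!s:5} keepalive_up={keepalive_up!s:5} -> {result}")
```

The middle case (ISL down, keepalive up) is the one we provoke later in this article by shutting down lag256 on the secondary switch.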

Enough talking; let's start configuring -

ISL link configuration

First we will configure the ISL ports. As we are using two ports for the ISL, we will bundle them into an LACP trunk.

interface lag 256

description ISL-Lag

no shutdown

no routing

vlan trunk native 1

vlan trunk allowed all

lacp mode active

interface 1/1/2

no shutdown

lag 256

interface 1/1/3

no shutdown

lag 256

Above we have configured an aggregated (layer 2) interface numbered 256 as the ISL interface and assigned two physical ports to it.
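
Since the same ISL stanza has to be entered on both switches, it can be convenient to generate it. The following Python sketch renders exactly the commands used above from a LAG number and a list of member ports. The function name `render_isl_lag` and the four-space indentation are my own choices for illustration and not part of any Aruba tooling.

```python
from typing import Sequence


def render_isl_lag(lag_id: int, members: Sequence[str], description: str = "ISL-Lag") -> str:
    """Render the ISL LAG configuration shown in this article as text."""
    lines = [
        f"interface lag {lag_id}",
        f"    description {description}",
        "    no shutdown",
        "    no routing",
        "    vlan trunk native 1",
        "    vlan trunk allowed all",
        "    lacp mode active",
    ]
    for port in members:
        # Each physical member port is enabled and bound to the LAG.
        lines += [f"interface {port}", "    no shutdown", f"    lag {lag_id}"]
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_isl_lag(256, ["1/1/2", "1/1/3"]))
```

The generated text can then be pasted into the configuration context of each switch.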

Let's verify the status of our LAG interface from SW-Master -

SW-Master# show lacp interfaces

State abbreviations :

A - Active P - Passive F - Aggregable I - Individual

S - Short-timeout L - Long-timeout N - InSync O - OutofSync

C - Collecting D - Distributing

X - State m/c expired E - Default neighbor state

Actor details of all interfaces:

------------------------------------------------------------------------------

Intf Aggr Port Port State System-ID System Aggr Forwarding

Name Id Pri Pri Key State

------------------------------------------------------------------------------

1/1/2 lag256 3 1 ALFNCD 08:00:09:e9:60:52 65534 256 up

1/1/3 lag256 4 1 ALFNCD 08:00:09:e9:60:52 65534 256 up

Partner details of all interfaces:

------------------------------------------------------------------------------

Intf Aggr Port Port State System-ID System Aggr

Name Id Pri Pri Key

------------------------------------------------------------------------------

1/1/2 lag256 3 1 ALFNCD 08:00:09:03:9e:01 65534 256

1/1/3 lag256 4 1 ALFNCD 08:00:09:03:9e:01 65534 256

SW-Master# show lacp aggregates lag256

Aggregate name : lag256

Interfaces : 1/1/3 1/1/2

Heartbeat rate : Slow

Hash : l3-src-dst

Aggregate mode : Active
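
The State column packs several LACP flags into one string such as ALFNCD. As a reading aid, the short Python sketch below expands such a string using the legend printed at the top of the output. The helper names are hypothetical, and treating "InSync + Collecting + Distributing" as the healthy condition is my own simplification.

```python
# Legend copied from the "State abbreviations" section of the output above.
LACP_STATE_FLAGS = {
    "A": "Active",
    "P": "Passive",
    "F": "Aggregable",
    "I": "Individual",
    "S": "Short-timeout",
    "L": "Long-timeout",
    "N": "InSync",
    "O": "OutofSync",
    "C": "Collecting",
    "D": "Distributing",
    "X": "State m/c expired",
    "E": "Default neighbor state",
}


def decode_lacp_state(flags: str) -> list:
    """Expand a flag string like 'ALFNCD' into readable names."""
    return [LACP_STATE_FLAGS[flag] for flag in flags]


def link_is_bundled(flags: str) -> bool:
    """Assumption: a member is healthy when it is in sync, collecting and distributing."""
    return all(flag in flags for flag in "NCD")


if __name__ == "__main__":
    print(decode_lacp_state("ALFNCD"))
    print(link_is_bundled("ALFNCD"))
```

For ALFNCD this gives Active, Long-timeout, Aggregable, InSync, Collecting and Distributing, which is what we want to see on both ISL members.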

VSX Configuration

Now we will configure the VSX between switches.

In "SW-Master" we run -

vsx

inter-switch-link lag 256

role primary

In "SW-Slave" we run -

vsx

inter-switch-link lag 256

role secondary

Now we will verify the status of VSX from SW-Master -

SW-Master# sh vsx status

VSX Operational State

---------------------

ISL channel : In-Sync

ISL mgmt channel : operational

Config Sync Status : in-sync

NAE : peer_reachable

HTTPS Server : peer_reachable

Attribute Local Peer

------------ -------- --------

ISL link lag256 lag256

ISL version 2 2

System MAC 08:00:09:e9:60:52 08:00:09:03:9e:01

Device Role primary secondary
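
If you want to check this state from a script rather than by eye, the "key : value" lines of the output are easy to parse. The sketch below is a minimal example that works on the text of `show vsx status` as shown above. How the text is collected from the switch (SSH, API and so on) is left out, and the function names are hypothetical.

```python
def parse_key_values(output: str) -> dict:
    """Collect 'key : value' lines from CLI output into a dict."""
    result = {}
    for line in output.splitlines():
        key, sep, value = line.partition(" : ")
        if sep:  # lines without ' : ' (headers, the attribute table) are skipped
            result[key.strip()] = value.strip()
    return result


def vsx_in_sync(status: dict) -> bool:
    """True when both the ISL channel and the config sync report in-sync."""
    return (
        status.get("ISL channel") == "In-Sync"
        and status.get("Config Sync Status") == "in-sync"
    )


SAMPLE = """\
VSX Operational State
---------------------
ISL channel : In-Sync
ISL mgmt channel : operational
Config Sync Status : in-sync
NAE : peer_reachable
HTTPS Server : peer_reachable
"""

if __name__ == "__main__":
    status = parse_key_values(SAMPLE)
    print(status)
    print("VSX healthy:", vsx_in_sync(status))
```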

At this point we are done with our VSX configuration; the switches are ready to run MLAG interfaces.

Note: A keepalive interface is not required for an operational VSX environment. It is recommended, however: in a split-brain situation the switches use the keepalive interface to communicate with each other.

MLAG configuration and synchronization

We will create two MLAG interfaces on each switch, one connecting to Srv1 and one to Srv2. These are two Debian 10 machines, each with two network cards that are also configured for LACP. As this is not an example of configuring link aggregation on Linux, I will only describe it briefly and show the verification commands in Linux.

In "SW-Master" we run -

! The "multi-chassis" keyword makes this LAG an MLAG

interface lag 5 multi-chassis

description Mlag-Srv1

no shutdown

no routing

lacp mode active

! Activate synchronization so that VLAN-related changes on this LAG are copied from the primary to the secondary switch automatically

vsx-sync vlans

interface lag 6 multi-chassis

description Mlag-Srv2

no shutdown

no routing

lacp mode active

vsx-sync vlans

interface 1/1/5

description Srv1

no shutdown

lag 5

interface 1/1/6

description Srv2

no shutdown

lag 6

In "Sw-Slave" we run -

interface lag 5 multi-chassis

description Mlag-Srv1

no shutdown

no routing

lacp mode active

interface lag 6 multi-chassis

description Mlag-Srv2

no shutdown

no routing

lacp mode active

interface 1/1/5

description Srv1

no shutdown

lag 5

interface 1/1/6

description Srv2

no shutdown

lag 6

The configuration commands are the same on both switches, with one exception: "vsx-sync vlans" on SW-Master. This is one of the noteworthy features of Aruba's VSX implementation and is called configuration-sync. Let's try it out. In "Sw-Master" we configure the following -

vlan 101

name Server-Vlan

vsx-sync

interface lag 5 multi-chassis

vlan access 101

interface lag 6 multi-chassis

vlan access 101

After that, if we look at the configuration of "Sw-Slave", we will see that vlan 101 and its related configuration have been synchronized automatically to our slave switch. In other vendors' implementations, we need to configure these vlan settings individually on each member switch.

SW-Slave# sh run (only relevant commands are shown)

vlan 101

name Server-Vlan

vsx-sync

interface lag 5 multi-chassis

vsx-sync vlans

no shutdown

description Mlag-Srv1

no routing

vlan access 101

lacp mode active

interface lag 6 multi-chassis

vsx-sync vlans

no shutdown

description Mlag-Srv2

no routing

vlan access 101

lacp mode active

interface 1/1/5

no shutdown

lag 5

interface 1/1/6

no shutdown

lag 6
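
To convince yourself that the sync really worked, you can compare the VLAN lines of every MLAG interface on both switches. The Python sketch below does this on two running-config texts. It assumes the texts are indented the way the switch prints them (sub-commands indented under their stanza), and the helper names are hypothetical.

```python
def parse_stanzas(config: str) -> dict:
    """Map each top-level line to the set of indented lines below it."""
    stanzas = {}
    current = None
    for raw in config.splitlines():
        if not raw.strip():
            continue
        if not raw[0].isspace():
            current = raw.strip()
            stanzas[current] = set()
        elif current is not None:
            stanzas[current].add(raw.strip())
    return stanzas


def vlan_lines(lines: set) -> set:
    """Keep only VLAN membership commands such as 'vlan access 101'."""
    return {line for line in lines if line.startswith("vlan ")}


def unsynced_mlags(primary_cfg: str, secondary_cfg: str) -> list:
    """List MLAG interfaces whose VLAN lines differ between the two switches."""
    primary = parse_stanzas(primary_cfg)
    secondary = parse_stanzas(secondary_cfg)
    return [
        name
        for name in primary
        if name.startswith("interface lag") and name.endswith("multi-chassis")
        and vlan_lines(primary[name]) != vlan_lines(secondary.get(name, set()))
    ]


PRIMARY = """\
interface lag 5 multi-chassis
    vsx-sync vlans
    no shutdown
    vlan access 101
interface lag 6 multi-chassis
    vsx-sync vlans
    no shutdown
    vlan access 101
"""

# Hypothetical stale secondary: lag 6 has not received its VLAN yet.
SECONDARY_STALE = """\
interface lag 5 multi-chassis
    no shutdown
    vlan access 101
interface lag 6 multi-chassis
    no shutdown
"""

if __name__ == "__main__":
    print(unsynced_mlags(PRIMARY, PRIMARY))
    print(unsynced_mlags(PRIMARY, SECONDARY_STALE))
```

An empty list means the VLAN configuration of all MLAG interfaces matches on both members.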

Now let's verify whether our MLAG is working or not -

SW-Master# sh lacp interfaces

State abbreviations :

A - Active P - Passive F - Aggregable I - Individual

S - Short-timeout L - Long-timeout N - InSync O - OutofSync

C - Collecting D - Distributing

X - State m/c expired E - Default neighbor state

Actor details of all interfaces:

------------------------------------------------------------------------------

Intf Aggr Port Port State System-ID System Aggr Forwarding

Name Id Pri Pri Key State

------------------------------------------------------------------------------

1/1/5 lag5(mc) 5 1 ALFNCD 08:00:09:e9:60:52 65534 5 up

1/1/6 lag6(mc) 6 1 ALFNCD 08:00:09:e9:60:52 65534 6 up

1/1/2 lag256 3 1 ALFNCD 08:00:09:e9:60:52 65534 256 up

1/1/3 lag256 4 1 ALFNCD 08:00:09:e9:60:52 65534 256 up

Partner details of all interfaces:

------------------------------------------------------------------------------

Intf Aggr Port Port State System-ID System Aggr

Name Id Pri Pri Key

------------------------------------------------------------------------------

1/1/5 lag5(mc) 1 255 ALFNCD 00:50:00:00:03:02 65535 9

1/1/6 lag6(mc) 1 255 ALFNCD 00:50:00:00:04:02 65535 9

1/1/2 lag256 3 1 ALFNCD 08:00:09:03:9e:01 65534 256

1/1/3 lag256 4 1 ALFNCD 08:00:09:03:9e:01 65534 256

From "Srv1" -

root@SRV1:~# cat /proc/net/bonding/bond0

Ethernet Channel Bonding Driver: v3.7.1 (April 27, 2011)

Bonding Mode: IEEE 802.3ad Dynamic link aggregation

Transmit Hash Policy: layer2 (0)

MII Status: up

MII Polling Interval (ms): 100

Up Delay (ms): 0

Down Delay (ms): 0

802.3ad info

LACP rate: slow

Min links: 0

Aggregator selection policy (ad_select): stable

System priority: 65535

System MAC address: 00:50:00:00:03:02

Active Aggregator Info:

Aggregator ID: 2

Number of ports: 2

Actor Key: 9

Partner Key: 5

Partner Mac Address: 08:00:09:e9:60:52
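
The same check can be scripted on the Linux side. The sketch below parses the text of /proc/net/bonding/bond0 and verifies that the bond runs 802.3ad and that its LACP partner is the system MAC we saw on the switch. The function names are hypothetical. Only the first occurrence of each key is kept, because the per-interface sections further down the real file repeat some key names.

```python
def parse_bonding(text: str) -> dict:
    """Collect 'Key: value' lines, keeping the first (bond-level) occurrence."""
    info = {}
    for line in text.splitlines():
        key, sep, value = line.partition(": ")
        if sep:
            info.setdefault(key.strip(), value.strip())
    return info


def bond_is_lacp_with(info: dict, partner_mac: str) -> bool:
    """True if the bond uses 802.3ad and negotiated with the given partner MAC."""
    return (
        "802.3ad" in info.get("Bonding Mode", "")
        and info.get("Partner Mac Address", "").lower() == partner_mac.lower()
    )


SAMPLE = """\
Bonding Mode: IEEE 802.3ad Dynamic link aggregation
MII Status: up
LACP rate: slow
System MAC address: 00:50:00:00:03:02
Active Aggregator Info:
Number of ports: 2
Actor Key: 9
Partner Key: 5
Partner Mac Address: 08:00:09:e9:60:52
"""

if __name__ == "__main__":
    # On the server itself: text = open("/proc/net/bonding/bond0").read()
    info = parse_bonding(SAMPLE)
    print(info["Number of ports"], bond_is_lacp_with(info, "08:00:09:E9:60:52"))
```

Note that "Number of ports: 2" together with a single partner MAC is what tells us the server sees the two switches as one LACP partner.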

Now we try to ping Srv2 (192.168.101.6/24) from Srv1 (192.168.101.5/24)

root@SRV1:~# ping 192.168.101.6

PING 192.168.101.6 (192.168.101.6) 56(84) bytes of data.

64 bytes from 192.168.101.6: icmp_seq=1 ttl=64 time=1.92 ms

64 bytes from 192.168.101.6: icmp_seq=2 ttl=64 time=1.90 ms

64 bytes from 192.168.101.6: icmp_seq=3 ttl=64 time=1.77 ms

64 bytes from 192.168.101.6: icmp_seq=4 ttl=64 time=1.53 ms

Keepalive Link Configuration

We will configure the keepalive link first, then have a look at its use case.

In "Sw-Master" we run -

interface 1/1/1

no shutdown

description Keepalive-Link-VSX

ip address 10.1.1.1/24

vsx

keepalive peer 10.1.1.2 source 10.1.1.1

In "Sw-Slave" we run -

interface 1/1/1

no shutdown

description Keepalive-Link-VSX

ip address 10.1.1.2/24

vsx

keepalive peer 10.1.1.1 source 10.1.1.2
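
The two keepalive stanzas have to mirror each other: the peer address on one switch is the source address on the other. A typo here is easy to make, so here is a small Python sketch that checks a pair of settings before they are pasted into the switches. The `KeepaliveConfig` class and `check_keepalive_pair` function are hypothetical helpers, and the same-subnet check only makes sense for a directly connected keepalive link like the one in this lab.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class KeepaliveConfig:
    interface_ip: str  # address on the keepalive interface, e.g. "10.1.1.1/24"
    peer: str          # argument of "keepalive peer"
    source: str        # argument of "source"


def check_keepalive_pair(a: KeepaliveConfig, b: KeepaliveConfig) -> list:
    """Return a list of human-readable problems; empty means consistent."""
    problems = []
    if_a = ipaddress.ip_interface(a.interface_ip)
    if_b = ipaddress.ip_interface(b.interface_ip)
    if ipaddress.ip_address(a.source) != if_a.ip:
        problems.append("switch A: source is not the interface address")
    if ipaddress.ip_address(b.source) != if_b.ip:
        problems.append("switch B: source is not the interface address")
    if ipaddress.ip_address(a.peer) != ipaddress.ip_address(b.source):
        problems.append("switch A: peer does not match switch B source")
    if ipaddress.ip_address(b.peer) != ipaddress.ip_address(a.source):
        problems.append("switch B: peer does not match switch A source")
    if if_a.network != if_b.network:
        # Lab-specific assumption: both ends sit on one directly connected subnet.
        problems.append("interfaces are not in the same subnet")
    return problems


if __name__ == "__main__":
    master = KeepaliveConfig("10.1.1.1/24", peer="10.1.1.2", source="10.1.1.1")
    slave = KeepaliveConfig("10.1.1.2/24", peer="10.1.1.1", source="10.1.1.2")
    print(check_keepalive_pair(master, slave) or "keepalive settings are consistent")
```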

Let's verify the keepalive is working -

SW-Master# show vsx brief

ISL State : In-Sync

Device State : Peer-Established

Keepalive State : Keepalive-Established

Device Role : primary

Number of Multi-chassis LAG interfaces : 2

SW-Master# show vsx configuration keepalive

Keepalive Interface : 1/1/1

Keepalive VRF : default

Source IP Address : 10.1.1.1

Peer IP Address : 10.1.1.2

UDP Port : 7678

Hello Interval : 1 Seconds

Dead Interval : 3 Seconds

SW-Master# show vsx status keepalive

Keepalive State : Keepalive-Established

Last Established : Tue May 26 21:57:23 2020

Last Failed : Tue May 26 21:24:01 2020

Peer System Id : 08:00:09:03:9e:01

Peer Device Role : secondary

Keepalive Counters

Keepalive Packets Tx : 4080

Keepalive Packets Rx : 4057

Keepalive Timeouts : 0

Keepalive Packets Dropped : 0
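
The Hello Interval of 1 second and the Dead Interval of 3 seconds shown earlier mean that a peer is considered gone once no heartbeat has arrived for 3 seconds. The Python sketch below is a toy model of such a dead-interval check, only to illustrate the idea. It is not how the switch implements it, and the class name `KeepaliveMonitor` is hypothetical. Time is passed in explicitly so the example does not need to sleep.

```python
class KeepaliveMonitor:
    """Toy dead-interval tracker for a heartbeat stream."""

    def __init__(self, dead_interval: float = 3.0):
        self.dead_interval = dead_interval
        self.last_rx = None  # time of the last received hello, None if never

    def on_hello(self, now: float) -> None:
        """Record that a heartbeat arrived at time 'now' (seconds)."""
        self.last_rx = now

    def peer_alive(self, now: float) -> bool:
        """The peer counts as alive while the last hello is newer than the dead interval."""
        if self.last_rx is None:
            return False
        return (now - self.last_rx) < self.dead_interval


if __name__ == "__main__":
    monitor = KeepaliveMonitor(dead_interval=3.0)
    for t in (0.0, 1.0, 2.0):  # hellos arrive once per second, then stop
        monitor.on_hello(t)
    for t in (2.5, 4.9, 5.0):
        print(f"t={t}: peer alive = {monitor.peer_alive(t)}")
```

In this model the last hello arrives at t=2.0, so the peer is still considered alive at t=4.9 and is declared dead at t=5.0.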

Now we will shut down "lag256" (the ISL) on "Sw-Slave".

interface lag 256

shutdown

We will have a look at the interface status -

SW-Slave# sh int brief

-------------------------------------------------------------------------------------

Port Native Mode Type Enabled Status Reason Speed

VLAN (Mb/s)

-------------------------------------------------------------------------------------

1/1/1 -- routed -- yes up 1000

1/1/2 1 trunk -- yes down Administratively down 1000

1/1/3 1 trunk -- yes down Administratively down 1000

1/1/4 -- routed -- no down Administratively down --

1/1/5 101 access -- yes down 1000

1/1/6 101 access -- yes down 1000

lag5 101 access -- yes down -- auto

lag6 101 access -- yes down -- auto

lag256 1 trunk -- no down -- auto

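We can see that the physical interfaces 1/1/5 and 1/1/6, which are members of MLAG 5 and 6, are both down, and the lag interfaces themselves are down as well. This is the split behaviour described earlier: with the ISL down, only the primary keeps forwarding.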

SW-Slave# show vsx status

VSX Operational State

---------------------

ISL channel : Out-Of-Sync

ISL mgmt channel : inter_switch_link_down

Config Sync Status : out-of-sync

NAE : peer_unreachable

HTTPS Server : peer_unreachable

Attribute Local Peer

------------ -------- --------

ISL link lag256

ISL version 2

System MAC 08:00:09:03:9e:01 08:00:09:e9:60:52

Device Role secondary

SW-Slave# show vsx status keepalive

Keepalive State : Keepalive-Established

Last Established : Tue May 26 21:46:27 2020

Last Failed :

Peer System Id : 08:00:09:e9:60:52

Peer Device Role : -- ISL down so no peer is detected

Keepalive Counters

Keepalive Packets Tx : 1630

Keepalive Packets Rx : 1630

Keepalive Timeouts : 0

Keepalive Packets Dropped : 0

Now if we reactivate the "lag256" interface, we will see that everything comes back up again. However, the individual interfaces in an MLAG take at least 180 seconds to come up. This timer ensures that everything is in sync before we start processing traffic on those ports again. If required, we can reduce this timer as shown below (this needs to be done on both the primary and the secondary switch) -

vsx

linkup-delay-timer 5

Conclusion

This concludes our example of how to configure VSX on Aruba CX series switches. For further reading I highly recommend the following -

1. ArubaOS-CX 10.04 Virtual Switching Extension (VSX) Guide
